A couple weeks ago, we had a kerfuffle here on Hackaday: A writer put out a piece with AI-generated headline art. It was, honestly, pretty good, but it was also subject to all of the usual horrors that get generated along the way. If you have played around with any of the image generators you know the AI-art uncanny style, where it looks good enough at first glance, but then you notice limbs in the wrong place if you look hard enough. We replaced it shortly after an editor noticed.

The story is that the writer couldn’t find any nice visuals to go with the blog post, which was about encoding data in QR codes and printing them out for storage. This is a problem we have frequently here, actually. When people write up a code hack, for instance, there’s usually just no good image to go along with it. Our writers have to get creative. In this case, he tossed it off to Stable Diffusion.

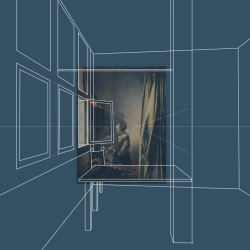

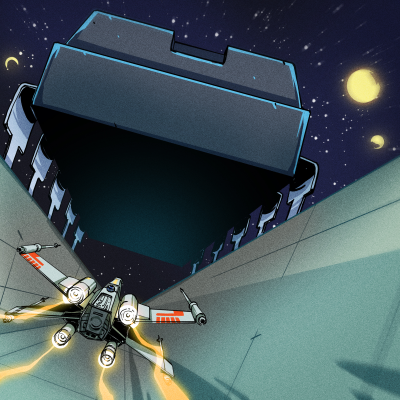

Some commenters were afraid that this meant that we were taking work away from our fantastic, and very human, art director Joe Kim, whose trademark style you’ve seen on many of our longer-form original articles. Of course we’re not! He’s a genius, and when we tell him we need some art about topics ranging from refining cobalt to Wimshurst machines to generate static electricity, he comes through. I think that all of us have probably wanted to make a poster out of one or more of his headline art pieces. Joe is a treasure.

But for our daily blog posts, which cover your works, we usually just use a picture of the project. We can’t ask Joe to make ten pieces of art per day, and we never have. At least as far as Hackaday is concerned, AI-generated art is just as good as finding some cleared-for-use clip art out there, right?

Except it’s not. There is a lot of uncertainty about the data that the algorithms are trained on: whether the copyright of the original artists was respected, or even needed to be, ethically or legally. Some people even worry that the whole thing is going to bring about the end of Art. (They worried about this at the introduction of the camera as well.) But then there are also the extra limbs, and AI-generated art’s clichéd styles, which we fear will get old and boring after we’re all saturated with them.

So, as a matter of policy, we’re not using AI-generated art for now, but that’s not to say that we don’t see both the benefits and the risks. We’re not Luddites, after all, but we are also in favor of artists getting paid for their work, and of respect for the commons when people copyleft-license their images. We’re very interested to see how this all plays out in the future, but for now, we’re sitting on the sidelines. Sorry if that means more headlines with colorful code!