So far, food for astronauts hasn’t exactly been haute cuisine. Freeze-dried cereal cubes, squeezable tubes filled with what amounts to baby food, and meals reconstituted with water from a fuel cell don’t seem like meals to write home about. And from the sound of research into turning asteroids into astronaut food, space food isn’t going to get better anytime soon. The work comes from Western University in Canada and proposes that carbonaceous asteroids like the recently explored Bennu be converted into edible biomass by bacteria. The exact bugs go unmentioned, but when fed simulated asteroid bits, they are said to produce a material similar in texture and appearance to a “caramel milkshake.” Having grown hundreds of liters of bacterial cultures in the lab, we agree that liquid cultures spun down in a centrifuge look tasty, but if the smell is any indication, the taste probably won’t live up to expectations. Still, when a 500-meter-wide chunk of asteroid can produce enough nutritionally complete food to sustain between 600 and 17,000 astronauts for a year without having to ship it up the gravity well, concessions will likely be made. We expect that this won’t apply to the nascent space tourism industry, which for the foreseeable future will probably build its customer base on deep-pocketed thrill-seekers, a group that’s not known for its ability to compromise on creature comforts.

mathematics: 30 Articles

Print Yourself Penrose Wave Tiles As An Excellent Conversation Starter

Ah, tiles. You can get square ones, and do a grid, or you can get fancier shapes and do something altogether more complex. By and large though, whatever pattern you choose, it will normally end up repeating on some scale or other. That is, unless you go with something like a Penrose Wave Tile. Discovered by mathematician Roger Penrose, they never exactly repeat, no matter how you lay them out.

[carterhoefling14] decided to try and create Penrose tiles at home—with a 3D printer being the perfect route to do it. Creating the tiles was simple—the first step was to find a Penrose pattern image online, which could then be used as the basis to design the 3D part in Fusion 360. From there, the parts were also given an inner wave structure to add further visual interest. The tiles were then printed to create a real-world Penrose tile form.

You could certainly use these Penrose tiles as decor, though we’d make some recommendations if you’re going down that path. For one, you’ll want to print them in a way that optimizes for surface quality, as post-processing is time-consuming and laborious. If you’re printing in plastic, probably don’t bother using these as floor tiles, as they won’t hold up. Wall tiles, though? Go nuts, just not as a splashback or anything. Keep it decorative only.

You can learn plenty more about Penrose tiling if you please. We do love a bit of maths around these parts, too. If you’ve been making your own topological creation, don’t hesitate to drop us a line.

Ask Hackaday: How Can We Leverage Tech For Education?

If you’re like us, you’ve studied the mathematician [Euler], but all you really remember is that you pronounce his name like “oiler” and not much else. [Welch Labs], on the other hand, not only remembers what he learned about logarithms and imaginary numbers but also has a beautiful video with helpful 3D graphics to explain the concepts.

This post, however, isn’t about that video. If you are interested in math, definitely watch it. It’s great. But it also got us thinking. What would it be like to be a high school math student today? In our day, we were lucky to have some simple 2D graph to explain concepts. Then it hit us: it probably is exactly the same.


Manually Computing Logarithms To Grok Calculators

Logarithms are everywhere in mathematics and derived fields, but we rarely think about how they, along with trigonometric functions, exponentials, square roots and others, are calculated after we punch the numbers into a calculator of some description and hit ‘calculate’. How do we even know that the answer it returns is remotely correct? This was the basic question that [Zachary Chartrand] set out to answer for [3Blue1Brown]’s Summer of Math Exposition 3 (SoME-3). Inspired by learning to script Python, he dug into how such calculations are implemented by the scripting language, which naturally led to the standard C library. There he found an interesting implementation of the natural logarithm and of the way geometric series convergence is sped up.

The short answer is that fundamental properties of these series are used to decrease the number of terms, and thus the number of calculations, required to get a result. One example provided in the article reduces the naïve approach from 36 terms down to 12 with some optimization, while the versions used in the standard C library are even more optimized. This not only reduces the time needed, but also the memory required, both of which make many types of calculations more feasible on less powerful systems.
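To get a feel for how much the choice of series matters, here is a minimal Python sketch. It is not the article's code or the C library's implementation; the function names, the tolerance, and the particular series are our own assumptions. It compares the plain Taylor series for ln(1 + u) against the faster-converging identity ln(x) = 2·atanh((x − 1)/(x + 1)), and adds a simple range reduction using the fact that x = m·2^e.

```python
import math

LN2 = 0.6931471805599453  # ln(2), stored as a constant for the range reduction

def ln_taylor(x, tol=1e-12, max_terms=10_000):
    """ln(x) for 0 < x <= 2 via ln(1 + u) = u - u^2/2 + u^3/3 - ...

    Returns (value, number_of_terms_summed).
    """
    u = x - 1.0
    total = 0.0
    power = u  # holds u**n
    for n in range(1, max_terms + 1):
        term = power / n
        if abs(term) < tol:
            return total, n - 1
        total += term if n % 2 == 1 else -term
        power *= u
    return total, max_terms

def ln_atanh(x, tol=1e-12, max_terms=10_000):
    """ln(x) via 2 * (z + z^3/3 + z^5/5 + ...), with z = (x - 1) / (x + 1).

    Returns (value, number_of_terms_summed).
    """
    z = (x - 1.0) / (x + 1.0)
    z2 = z * z
    total = 0.0
    power = z  # holds z**(2k + 1)
    for k in range(max_terms):
        term = power / (2 * k + 1)
        if abs(term) < tol:
            return 2.0 * total, k
        total += term
        power *= z2
    return 2.0 * total, max_terms

def ln(x):
    """ln(x) for any x > 0: split x = m * 2**e, then ln(x) = ln(m) + e * ln(2)."""
    m, e = math.frexp(x)  # m is in [0.5, 1), so the series argument stays small
    value, _ = ln_atanh(m)
    return value + e * LN2

if __name__ == "__main__":
    print("Taylor terms for ln(1.5):", ln_taylor(1.5)[1])
    print("atanh  terms for ln(1.5):", ln_atanh(1.5)[1])
    print("ln(10) sketch vs math.log:", ln(10.0), math.log(10.0))
```

Running it shows the second series needing far fewer terms for the same tolerance, which is the same kind of saving the article describes, even if the exact counts differ from its 36-versus-12 example.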

Even if most of us are probably more than happy to just keep mashing that ‘calculate’ button and (rightfully) assume that the answer is correct, such a glimpse at the internals of the calculations involved definitely provides a measure of confidence and understanding, if not the utmost appreciation for those who did the hard work to make all of this possible.

Fourier, The Animated Series

We’ve seen many graphical and animated explainers for the Fourier series. We suppose it is because it is so much fun to create the little moving pictures, and, as a bonus, it really helps explain this important concept. Even if you already understand it, there’s something beautiful and elegant about watching a mathematical formula tracing out waveforms.

[Andrei Ciobanu] has added his own take to the body of animations out there (or, at least, part one of a series) and we were impressed with the scope of it. The post starts with the basics, but doesn’t shy away from more advanced math where needed. Don’t worry, it’s not all dull. There are mathematical flowers, and even a brief mention of Pink Floyd.

The Fourier series is the basis for much of digital signal processing, allowing you to build a signal from the sum of many sinusoids. You can also go in reverse and break a signal up into its constituent waves.
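Both directions fit in a few lines of Python. The sketch below is our own illustration rather than anything from [Andrei]’s post, and the function names are made up for the example: it builds a square wave from a sum of odd sine harmonics, then goes the other way and recovers the sine coefficients from the square wave by numerical integration.

```python
import math

def square_wave_partial_sum(t, n_terms):
    """Approximate a unit square wave by summing its first n_terms odd harmonics:
    f(t) ~ (4 / pi) * (sin(t) + sin(3t)/3 + sin(5t)/5 + ...)
    """
    total = 0.0
    for i in range(n_terms):
        k = 2 * i + 1
        total += math.sin(k * t) / k
    return 4.0 / math.pi * total

def square(t):
    """An ideal square wave with period 2*pi: +1 where sin(t) >= 0, else -1."""
    return 1.0 if math.sin(t) >= 0 else -1.0

def sine_coefficient(f, k, samples=10_000):
    """Estimate b_k = (1/pi) * integral of f(t) * sin(k t) over [-pi, pi]
    using the midpoint rule.
    """
    dt = 2 * math.pi / samples
    total = 0.0
    for i in range(samples):
        t = -math.pi + (i + 0.5) * dt
        total += f(t) * math.sin(k * t)
    return total * dt / math.pi

if __name__ == "__main__":
    # Synthesis: more harmonics bring the sum closer to 1 at the middle of the pulse.
    for n in (1, 5, 50, 500):
        print(n, square_wave_partial_sum(math.pi / 2, n))
    # Analysis: odd harmonics come out near 4 / (pi * k), even ones near zero.
    for k in range(1, 6):
        print(k, sine_coefficient(square, k))
```

The analysis step is a brute-force stand-in for what a discrete Fourier transform does far more efficiently.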

We were impressed with [Andrei’s] sinusoid Tetris, and it appears here, too. We’ve seen many visualizers for this before, but each one is a little different.

Random Number Generation By Brain

If you want to start an argument in certain circles, claim to have a random number generation algorithm. Turns out that producing real random numbers is hard, which is why people often turn to strange methods and still, sometimes, don’t get it right. [Hillel Wayne] wanted to get a “good enough” method that could be done without a computer and found the answer in an old Usenet post from random number guru [George Marsaglia].

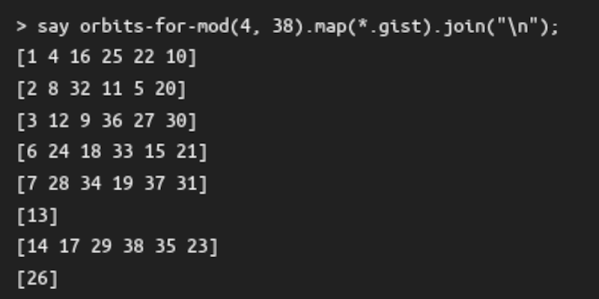

The algorithm is simple. Pick a two-digit number (ahem) at random. OK, so you still have to pick a starting number. To get the next number, take the top digit and add six times the bottom digit. So in C: n1=n/10+6*(n%10). Then use the last digit as your random number from 0 to 9. Why does it work? To answer that, the post shows some Raku code to investigate the behavior.

In particular, where does the magic number 6 come into play? The program shows that not just any number works well there. For example, if you used 4 instead of 6 and then started with 13, all your random digits would be 3, because 1 + 4×3 is 13 again. Not really all that random! However, 6 is just a handy number. If you don’t mind a little extra math, there are better choices, like 50.
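If you don’t have Raku handy, a rough Python sketch lets you poke at the same behavior. It assumes the rule is “top digit plus the multiplier times the bottom digit”, which is the reading consistent with the multiplier-4, seed-13 example above. The function names are ours, not from the original post.

```python
def next_state(n, multiplier=6):
    """One step of the mental generator: top digit(s) plus multiplier times the last digit."""
    return n // 10 + multiplier * (n % 10)

def digits(seed, count, multiplier=6):
    """Return the first `count` output digits (last digit of each new state)."""
    out = []
    n = seed
    for _ in range(count):
        n = next_state(n, multiplier)
        out.append(n % 10)
    return out

def cycle_length(seed, multiplier=6):
    """Count how many states the generator visits before it starts repeating."""
    seen = {}
    n, step = seed, 0
    while n not in seen:
        seen[n] = step
        n = next_state(n, multiplier)
        step += 1
    return step - seen[n]

if __name__ == "__main__":
    print(digits(23, 5))                 # [0, 2, 2, 3, 9]
    print(cycle_length(23))              # 58
    print(digits(13, 5, multiplier=4))   # [3, 3, 3, 3, 3]
```

With a multiplier of 6, each step amounts to multiplying by 6 modulo 59, which is why a seed like 23 runs through 58 states before repeating. With 4 and a seed of 13, the state never moves at all.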

If you think humans are good at picking random numbers, ask someone to pick a number between 1 and 4 and press them to do it quickly. Nearly always (nearly) they will pick 2. However, don’t be surprised when some people pick 141. Not everyone does well under pressure.

If you want super random numbers, try a lava lamp. Or grab some 555s and a few Nixie tubes.

50-Year-Old Program Gets Speed Boost

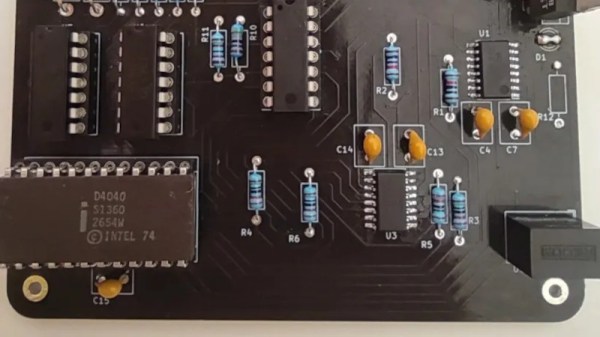

At first glance, getting a computer program to run faster than the first electronic computers might seem trivial. After all, most of us carry enormously powerful processors in our pockets every day as if that’s normal. But [Mark] isn’t trying to beat computers like the ENIAC with a mobile ARM processor or other modern device. He’s now programming with the successor to the original Intel integrated circuit processor, the 4040, but beating the ENIAC is still a little more complicated than you might think with a processor from 1974.

For this project, the goal was to best the 70-hour time set by ENIAC for computing the first 2035 digits of pi. There are a number of algorithms for performing this calculation, but using a 4-bit processor and an extremely limited memory of only 1280 bytes rules out many of them, especially with the self-imposed time limit. The limited instruction set of these early processors is a potential bottleneck as well. Given these limitations, [Mark] decided to use [Fabrice Bellard]’s algorithm. He goes into great detail about the mathematics behind this method before coding it in JavaScript. Generating assembly language from the working JavaScript turned out to be fairly straightforward.

[Mark] is also doing a lot of work on the 4040 to get this program running as well, including upgrades to the 40xx tool stack, the compiler and linker, and an emulator he’s using to test his program before sending it to physical hardware. The project is remarkably well-documented, including all of the optimizations needed to get these antique processors running fast enough to beat the ENIAC. We won’t spoil the results for you, but as a hint to how it worked out, he started this project using the 4040 since his original attempt using a 4004 wasn’t quite fast enough.