Why is it always a helium leak? It seems whenever there’s a scrubbed launch or a narrowly averted disaster, space exploration just can’t get past the problems of helium plumbing. We’ve had a bunch of helium problems lately, most famously with the leaks in Starliner’s thruster system that have prevented astronauts Butch Wilmore and Suni Williams from returning to Earth in the spacecraft, leaving them on an extended mission to the ISS. Ironically, the launch itself was troubled by a helium leak before the rocket ever left the ground. More recently, the Polaris Dawn mission, which is supposed to feature the first spacewalk by a private crew, was scrubbed by SpaceX due to a helium leak on the launch tower. And to round out the helium woes, we now have news that the Peregrine mission, which was supposed to carry the first commercial lander to the lunar surface but instead ended up burning up in the atmosphere and crashing into the Pacific, failed due to — you guessed it — a helium leak.


generative AI: 7 Articles

What If The Matrix Was Made In The 1950s?

We’ve noticed a recent YouTube trend of producing trailers for shows and movies as if they were produced in the 1950s, even when they weren’t. The results are impressive and, as you might expect, leverage AI generation tools. While we enjoy watching them, we were especially interested in [Patrick Gibney’s] peek behind the curtain of how he makes them, as you can see below. If you want to see an example of the result first, check out the second video, showing a 1950s-era The Matrix.

Of course, you could do some of it yourself, but if you want the full AI experience, [Patrick] suggests using ChatGPT to produce a script, though he admits that if he did that, he would tweak the results. Other AI tools create the pictures used and the announcer-style narration. Another tool produces cinematographic shots that include the motion of the “actors” and other things in the scene. More tools create the background music.


Wrencher-2: A Bold New Direction For Hackaday

Over the last year it’s fair to say that a chill wind has blown across the face of the media industry, as the prospect emerges of many content creation tasks formerly performed by humans instead being swallowed up by the inexorable rise of generative AI. In a few years, we’re told, there may even be no more journalists, as the computers become capable of keeping your news desires sated with the help of their algorithms.

Here at Hackaday, we can see this might be the case for a gutter rag obsessed with celebrity love affairs and whichever vegetable is supposed to cure cancer this week, but we continue to believe that for quality coverage of the latest and greatest in the hardware hacking world, you can’t beat a writer made of good old-fashioned meat. Indeed, in a world saturated by low-quality content, the opinions of smart and engaged writers become even more valuable. So we’ve decided to go against the trend, by launching not a journalist powered by AI, but an AI powered by journalists.

Announcing Wrencher-2, a Hackaday chat assistant in your browser

Wrencher-2 is a new paradigm in online chat assistants, eschewing generative algorithms in favour of the collective expertise of the Hackaday team. Ask Wrencher-2 a question, and you won’t get a vague and made-up answer from a computer; instead you’ll get a pithy and on-the-nail answer from a Hackaday staffer. Go on – try it!

Generative AI Now Encroaching On Music

While it might not seem like it to a novice, music turns out to be a highly mathematical endeavor with precise ratios between chords and notes as well as an overall structure of rhythm and timing. This is especially true of popular music, which has even more recognizable repeating patterns and trends, making it unfortunately an easy target for modern generative AI, which is capable of analyzing huge amounts of data and producing arguably unique creations. This one, called Suno, does just that for better or worse.

Unlike other generative AI offerings that are currently available for creating music, this one is not only capable of generating the musical underpinnings of the song itself but can additionally create a layer of intelligible vocals as well. A deeper investigation of the technology by Rolling Stone found that the tool uses its own models to come up with the music and then offloads the text generation for the vocals to ChatGPT, finally turning the resulting lyrics into fairly convincing vocals. Like image and text generation models that have come out in the last few years, this has the potential to be significantly disruptive.

While we’re not particularly excited about living in a world where humans toil while the machines create art and not the other way around, the best we could hope for is a world where real musicians use these models as tools to enhance their creativity rather than as outright substitutes, much as programmers currently use ChatGPT. That might be an overly optimistic view, though, and only time will tell.
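To make the "precise ratios" mentioned above a little more concrete, here is a small sketch of the arithmetic behind common musical intervals. It compares the simple whole-number ratios of just intonation (3:2 for a perfect fifth, 5:4 for a major third) with the twelve-tone equal temperament used on most modern instruments. The function names are ours, chosen for illustration, and none of this is claimed to reflect how Suno or any other generative model represents music internally.

```python
import math

SEMITONES_PER_OCTAVE = 12  # twelve-tone equal temperament


def equal_tempered_ratio(semitones):
    """Frequency ratio of an interval spanning the given number of semitones."""
    return 2 ** (semitones / SEMITONES_PER_OCTAVE)


def cents_off(ratio, target):
    """How far `ratio` sits from `target`, in cents (1200 cents per octave)."""
    return 1200 * math.log2(ratio / target)


if __name__ == "__main__":
    # (interval name, semitones, just-intonation ratio)
    intervals = [
        ("perfect fifth", 7, 3 / 2),
        ("major third", 4, 5 / 4),
        ("octave", 12, 2 / 1),
    ]
    for name, semitones, just in intervals:
        tempered = equal_tempered_ratio(semitones)
        print(f"{name}: tempered {tempered:.4f}, just {just:.4f}, "
              f"difference {cents_off(tempered, just):+.2f} cents")
```

The equal-tempered fifth lands within about two cents of the pure 3:2 ratio, while the major third is noticeably sharper than 5:4, which is the kind of regular numerical structure that makes music amenable to statistical modeling.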

Creators Can Fight Back Against AI With Nightshade

If an artist were to make use of a piece of intellectual property owned by a large tech company, they would risk facing legal action. Yet many creators are unhappy that those same tech companies are using their IP on a grand scale in the form of training material for generative AI. Can they fight back?

Perhaps now they can, with Nightshade, from a team at the University of Chicago. It’s a piece of software for Windows and macOS that poisons an image with imperceptible shading, to make an AI classify it as something entirely different from what it appears to show.

The idea is that creators use it on their artwork, and leave it for unsuspecting AIs to assimilate. Their example is that a picture of a cow might be poisoned such that the AI sees it as a handbag, and if enough creators use the software the AI is forever poisoned to return a picture of a handbag when asked for one of a cow. If enough of these poisoned images are put online then the risks of an AI using an online image become too high, and the hope is that then AI companies would be forced to take the IP of their source material seriously.

This only works if enough creators take up and use the software, but we are guessing that an inevitable result will be an arms race between AIs and image poisoners. One thing is certain though: as the AI hype has fueled such growth in generative AI systems, creators, whether they be major publishers, your favourite human-generated tech news website, or someone drawing a cartoon strip in their bedroom, deserve not to have their work stolen in this way.
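As a rough intuition for how a change too small to notice can still flip what a model "sees", here is a toy sketch. It is emphatically not Nightshade's algorithm, which targets the training data of image generators. Instead it uses a made-up linear "cow vs. handbag" scorer and nudges every pixel by a tiny amount in the direction that lowers the score. The weights, pixel values, and function names are all invented for illustration.

```python
def score(weights, pixels):
    """Toy linear classifier: positive means 'cow', negative means 'handbag'."""
    return sum(w * p for w, p in zip(weights, pixels))


def perturb(weights, pixels, epsilon):
    """Move each pixel by at most epsilon in the direction that lowers the score."""
    def sign(w):
        return (w > 0) - (w < 0)
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]


# Hypothetical 1000-pixel image with brightness values on a 0..1 scale.
N = 1000
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(N)]
image = [0.51 if i % 2 == 0 else 0.50 for i in range(N)]

# Each pixel changes by only 0.01, roughly 1% of the brightness range.
poisoned = perturb(weights, image, epsilon=0.01)

if __name__ == "__main__":
    print("original score:", score(weights, image))
    print("poisoned score:", score(weights, poisoned))
    print("largest pixel change:", max(abs(a - b) for a, b in zip(image, poisoned)))
```

Because the tiny per-pixel shifts all push in the same direction, they add up across a thousand pixels and the score changes sign, even though no individual pixel moves by more than the chosen epsilon. Real image models are far from linear, so treat this purely as an illustration of the principle.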

Multi-View Wire Art Meets Generative AI

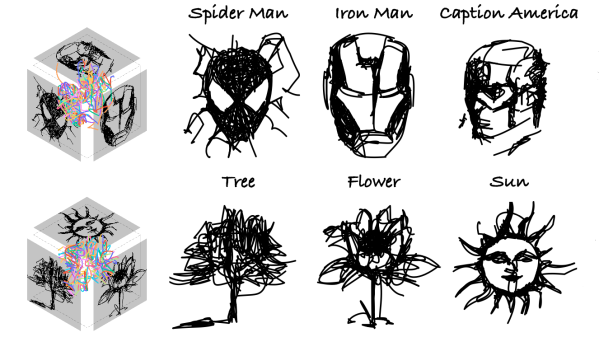

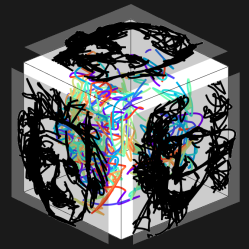

DreamWire is a system for generating multi-view wire art using machine learning techniques to help generate the patterns required.

What’s wire art? It’s a three-dimensional twisted mass of lines which, when viewed from a certain perspective, yields an image. Multi-view wire art produces different images from the same mass depending on the viewing angle, and as one can imagine, such things get very complex, very quickly.
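The basic geometric idea can be sketched with a toy example. The code below is not DreamWire's method and involves no machine learning; it simply intersects two silhouettes to build a blocky set of 3D points that shows one image from the front and another from the side. The `carve` and `project` helpers and the tiny "H" and "T" patterns are our own inventions for illustration, and the trick only works here because every row is non-empty in both images.

```python
def carve(front, side):
    """Return the (x, y, z) voxels consistent with both silhouettes.

    front[y][x] is the image seen looking along the z axis;
    side[y][z] is the image seen looking along the x axis.
    """
    return {
        (x, y, z)
        for y, (front_row, side_row) in enumerate(zip(front, side))
        for x, f in enumerate(front_row) if f == "#"
        for z, s in enumerate(side_row) if s == "#"
    }


def project(voxels, axis, width, height):
    """Flatten the voxels along 'z' (front view) or 'x' (side view)."""
    rows = [["."] * width for _ in range(height)]
    for x, y, z in voxels:
        col = x if axis == "z" else z
        rows[y][col] = "#"
    return ["".join(row) for row in rows]


FRONT = ["#.#", "###", "#.#"]  # a crude letter H
SIDE = ["###", ".#.", ".#."]   # a crude letter T

sculpture = carve(FRONT, SIDE)

if __name__ == "__main__":
    print("\n".join(project(sculpture, "z", 3, 3)))  # view from the front
    print()
    print("\n".join(project(sculpture, "x", 3, 3)))  # view from the side
```

Even this tiny case hints at why the real problem gets complex quickly: a wire sculpture has to be a connected, buildable curve rather than a cloud of blocks, and adding more views or more detailed images rapidly shrinks the set of shapes that satisfy all of them at once.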

A recently-released paper explains how the system works, including the role generative AI plays and why it is uniquely suited to creating meaningful intersections between multiple inputs. There’s also a video (embedded just under the page break) that showcases many of the results researchers obtained.

The GitHub repository for the project doesn’t have much in it yet, but it’s a good place to keep an eye on if you’re interested in what comes next.

We’ve seen generative AI applied in a similarly novel way to help create visual anagrams, or 2D patterns that can be interpreted differently based on a variety of orientations and permutations. These sorts of systems still need to be guided by a human, but having machine learning do the heavy lifting allows just about anybody to explore their creativity.

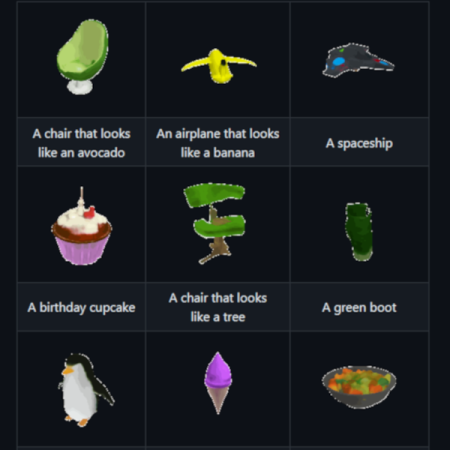

3D Design With Text-Based AI

Generative AI is the new thing right now, proving to be a useful tool for professional programmers, writers of high school essays, and all kinds of other applications in between. It’s also been shown to be effective in generating images, as the DALL-E program has demonstrated with its impressive image-creating abilities. It should surprise no one that this type of AI continues to make inroads into other areas, this time with a program from OpenAI called Shap-E, which can generate 3D models.

Like most of OpenAI’s offerings, this takes plain language as its input and can generate relatively simple 3D models from that text. The examples given by OpenAI include some bizarre models using text prompts such as a chair shaped like an avocado or an airplane that looks like a banana. It can generate textured meshes and neural radiance fields, each of which has its own advantages when it comes to available computing power, training methods, and other considerations. The 3D models that it is able to generate have a Super Nintendo-style feel to them, but we can only expect this technology to grow exponentially like other AI has been doing lately.

For those wondering about the name, it’s apparently a play on the 2D image generator DALL-E, which is itself a combination of the names of the famous robot WALL-E and the famous artist Salvador Dalí. The Shap-E program is available for anyone to use from this GitHub page. Even though this code comes from OpenAI themselves, plenty are speculating that the coming AI revolution will be driven largely by open-source projects rather than by OpenAI or Google, though how that will play out is still somewhat hazy.