Large Language Models (LLMs) can produce extremely human-like communication, but their inner workings are something of a mystery. Not a mystery in the sense that we don’t know how an LLM works, but a mystery in the sense that the exact process of turning a particular input into a particular output is something of a black box.

This “black box” trait is common to neural networks in general, and LLMs are very deep neural networks. It is not really possible to explain precisely why a specific input produces a particular output, and not something else.

Why? Because neural networks are neither databases nor lookup tables. In a neural network, the activation of individual neurons cannot be meaningfully mapped to specific concepts or words. The connections are so complex, numerous, and multidimensional that trying to tease out their relationships in any straightforward way simply does not make sense.
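To make that concrete, here is a toy sketch of what researchers call superposition. When a network has to represent more concepts than it has neurons, each concept ends up stored as a direction spread across several neurons, so any single neuron responds to several unrelated concepts. Everything below (concept names, neuron count, weights) is hypothetical and hand-picked, not taken from any real model.

```python
# Hypothetical toy: 3 "neurons" must represent 5 concepts, so each concept
# is stored as a direction (a weighted mix of neurons), not as one neuron.
CONCEPTS = ["cat", "bridge", "uppercase", "function call", "French"]
DIRECTIONS = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.6, 0.8, 0.0],   # shares neurons 0 and 1 with "cat" and "bridge"
    [0.0, 0.6, 0.8],   # shares neurons 1 and 2 with "bridge" and "uppercase"
]

def neuron_activations(active):
    """Sum the directions of the active concepts into one activation vector."""
    acts = [0.0, 0.0, 0.0]
    for name in active:
        direction = DIRECTIONS[CONCEPTS.index(name)]
        acts = [a + d for a, d in zip(acts, direction)]
    return acts

def concepts_using_neuron(i):
    """List every concept whose direction puts nonzero weight on neuron i."""
    return [c for c, d in zip(CONCEPTS, DIRECTIONS) if d[i] != 0.0]

for i in range(3):
    print(f"neuron {i} participates in: {concepts_using_neuron(i)}")
print(neuron_activations(["cat", "French"]))
```

Reading neuron 1 on its own cannot tell you whether the input involved a bridge, a function call, or French; only the pattern across neurons carries that information.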

Neural Networks are a Black Box

In a way, this shouldn’t be surprising. After all, the entire umbrella of “AI” is about using software to solve the sorts of problems that humans, in general, struggle to write a program for directly. It’s perhaps no wonder that the end product has some level of inscrutability.

This isn’t what most of us expect from software, but as humans we can relate to the black box aspect more than we might realize. Take, for example, the process of elegantly translating a phrase from one language to another.

To illustrate, I’d like to borrow an idea from an article by Lance Fortnow in Quanta Magazine about the ubiquity of computation in our world. Lance asks us to imagine a woman named Sophie who grew up speaking French and English and works as a translator. Sophie can easily take any English text and produce a sentence of equivalent meaning in French. Sophie’s brain follows some kind of process to perform this conversion, but Sophie likely doesn’t understand the entire process. She might not even think of it as a process at all. It’s something that just happens. Sophie, like most of us, is intimately familiar with black box functionality.

The difference is that while many of us (perhaps grudgingly) accept this aspect of our own existence, we are understandably dissatisfied with it as a feature of our software. New research has made progress towards changing this.

Identifying Conceptual Features in Language Models

We know perfectly well how LLMs work, but that doesn’t help us pick apart individual transactions. Opening the black box while it’s working yields only a mess of discrete neural activations that cannot be meaningfully mapped to particular concepts, words, or whatever else. Until now, that is.

Recent developments have made the black box much less opaque, thanks to tools that can map and visualize LLM internal states during computation. This creates a conceptual snapshot of what the LLM is — for lack of a better term — thinking in the process of putting together its response to a prompt.
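Any such snapshot starts with recording intermediate activations while the model runs. The sketch below uses a made-up two-layer network in plain Python and a hypothetical `record` callback invoked after each layer. In PyTorch the analogous mechanism is `torch.nn.Module.register_forward_hook`, though real interpretability tooling is considerably more involved than this.

```python
# Hypothetical two-layer toy network; the weights are made up for illustration.
W1 = [[0.5, -1.0], [1.5, 0.25], [-0.5, 0.75]]   # 3 hidden units, 2 inputs
W2 = [[1.0, -2.0, 0.5]]                          # 1 output, 3 hidden units

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def relu(v):
    return [max(0.0, x) for x in v]

def forward(x, hooks=()):
    """Run the toy network, calling hook(layer_name, activations) per layer."""
    hidden = relu(matvec(W1, x))
    for hook in hooks:
        hook("hidden", hidden)
    output = matvec(W2, hidden)
    for hook in hooks:
        hook("output", output)
    return output

snapshot = {}

def record(name, acts):
    # Store a copy so later computation cannot mutate the snapshot.
    snapshot[name] = list(acts)

forward([1.0, 2.0], hooks=[record])
print(snapshot)   # → {'hidden': [0.0, 2.0, 1.0], 'output': [-3.5]}
```

The snapshot holds raw numbers only; turning them into concepts is the hard part the rest of this article is about.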

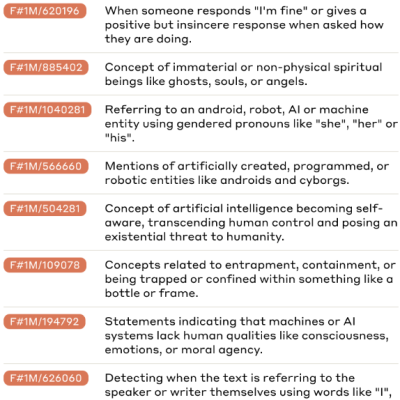

Anthropic have recently shared details on their success in mapping the mind of their Claude 3 Sonnet model by finding a way to match patterns of neuron activations to concrete, human-understandable concepts called features.
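The approach is a form of dictionary learning: a sparse autoencoder is trained to rewrite each activation vector as a small number of active features, each with its own direction in activation space. The sketch below shows only the encode and decode steps with hand-picked weights, plus a reconstruction-plus-L1 loss of the general kind such training minimizes. The feature names, weights, and biases are all hypothetical; a real autoencoder learns millions of features from data. Some formulations weight the L1 term by each decoder direction's length, which makes no difference here because the toy directions have unit length.

```python
# Toy sparse-autoencoder dictionary. Feature names, weights, and biases are
# hypothetical and hand-picked; a real one is trained on model activations.
FEATURES = ["Golden Gate Bridge", "code error", "uppercase"]
W_ENC = [[1.0, 0.0, 0.0],      # one row per feature, one column per neuron
         [0.0, 1.0, -0.5],
         [0.0, 0.0, 1.0]]
B_ENC = [-0.25, -0.25, -0.25]  # negative biases keep weak features at zero
W_DEC = [[1.0, 0.0, 0.0],      # one unit-length direction per feature
         [0.0, 1.0, 0.0],
         [0.0, 0.0, 1.0]]
B_DEC = [0.0, 0.0, 0.0]

def encode(x):
    """Feature activations: f_i = ReLU(W_ENC[i] . x + B_ENC[i])."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(W_ENC, B_ENC)]

def decode(f):
    """Reconstruction: B_DEC plus each feature's activation times its direction."""
    x_hat = list(B_DEC)
    for fi, direction in zip(f, W_DEC):
        x_hat = [a + fi * d for a, d in zip(x_hat, direction)]
    return x_hat

def loss(x, l1_coeff=0.1):
    """Squared reconstruction error plus an L1 penalty that rewards sparsity."""
    f = encode(x)
    x_hat = decode(f)
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return reconstruction + l1_coeff * sum(abs(fi) for fi in f)

x = [1.0, 0.125, 0.0625]   # a made-up activation vector
f = encode(x)
active = [name for name, fi in zip(FEATURES, f) if fi > 0]
print(active, f, decode(f), loss(x))
```

Only one of the three features fires for this input, which is the point: a messy activation vector becomes a short, readable list of named features.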

A feature can be just about anything: a person, a place, an object, or more abstract things like the idea of upper case, or function calls. The fact that a feature is activated does not mean it factors directly into the output, but it does mean it played some role in the path the output took.
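One way to build intuition for why an active feature need not shape the output: in a simplified linear picture, a feature's push on a given output is its activation times how well its direction lines up with that output's direction. The feature directions and the single output direction below are hypothetical, and a real model passes activations through many further layers, so treat this only as an illustration.

```python
# Hypothetical linear toy: features with made-up directions in a 3-D space,
# and one output direction standing in for, say, the score of a single token.
DECODER = {
    "uppercase":     [1.0, 0.0, 0.0],
    "function call": [0.0, 1.0, 0.0],
    "Paris":         [0.0, 0.5, 0.5],
}
OUTPUT_DIRECTION = [0.0, 0.0, 1.0]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def contributions(feature_acts):
    """Per feature: activation times alignment with the output direction."""
    return {name: act * dot(DECODER[name], OUTPUT_DIRECTION)
            for name, act in feature_acts.items()}

acts = {"uppercase": 2.0, "function call": 0.0, "Paris": 2.0}
print(contributions(acts))
# "uppercase" is strongly active but contributes nothing to this output,
# because its direction is orthogonal to OUTPUT_DIRECTION.
```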

With a way to map groups of activations to features — a significant engineering challenge — one can meaningfully interpret the contents of the black box. It is also possible to measure a sort of relational “distance” between features, and therefore get an even better idea of what a given state of neural activation represents in conceptual terms.
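A natural way to measure that relational “distance” is the cosine similarity between feature directions. This sketch ranks a few hypothetical features by similarity to a chosen one; the vectors are invented four-dimensional stand-ins for learned decoder directions, not values from any real model.

```python
import math

# Hypothetical feature directions (a real dictionary learns these from data).
DIRECTIONS = {
    "Golden Gate Bridge":  [0.9, 0.1, 0.0, 0.1],
    "Alcatraz Island":     [0.8, 0.3, 0.0, 0.2],
    "San Francisco":       [0.7, 0.2, 0.1, 0.4],
    "Python syntax error": [0.0, 0.1, 0.9, 0.0],
}

def cosine(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def nearest(name, k=2):
    """Return the k other features whose directions are most similar."""
    target = DIRECTIONS[name]
    scored = [(cosine(target, vec), other)
              for other, vec in DIRECTIONS.items() if other != name]
    return [other for _, other in sorted(scored, reverse=True)[:k]]

print(nearest("Golden Gate Bridge"))
```

Cosine similarity ignores vector length, so a weakly and a strongly scaled version of the same direction count as identical, which suits comparing "what" a feature means rather than "how much".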

Making Sense of it all

One way this can be used is to produce a heat map that highlights how heavily different features were involved in Claude’s responses. Artificially manipulating the weighting of different concepts changes Claude’s responses in predictable ways (video), demonstrating that the features are indeed reasonably accurate representations of the LLM’s internal state. More details on this process are available in the paper Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet.
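A heat map of this kind boils down to showing, token by token, how strongly a feature fired. This sketch renders invented activation values for one hypothetical feature as ASCII shades scaled to the row's peak; real tools draw colored backgrounds instead.

```python
# Hypothetical per-token activations of a single feature (values invented).
tokens = ["The", " Golden", " Gate", " is", " red"]
acts = [0.0, 2.4, 3.1, 0.3, 0.9]

SHADES = " .:*#"   # from "not active" to "most active"

def heat_row(tokens, acts):
    """Prefix each token with a shade scaled to the row's peak activation."""
    peak = max(acts) or 1.0   # avoid dividing by zero when nothing fires
    cells = []
    for tok, a in zip(tokens, acts):
        level = round(a / peak * (len(SHADES) - 1))
        cells.append(f"[{SHADES[level]}]{tok}")
    return "".join(cells)

print(heat_row(tokens, acts))   # → [ ]The[*] Golden[#] Gate[ ] is[.] red
```

The sketch assumes non-negative activations, which holds for features produced by a ReLU encoder like the one described in the paper.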

Mapping the mind of a state-of-the-art LLM like Claude may be a nontrivial undertaking, but that doesn’t mean the process is entirely the domain of tech companies with loads of resources. Inspectus by labml.ai is a visualization tool that works similarly to provide insight into the behavior of LLMs during processing. There is a tutorial on using it with a GPT-2 model, but don’t let that turn you off. GPT-2 may be older, but it is still relevant.
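As far as I can tell, Inspectus is mainly geared toward attention maps. The matrix such a view displays is, roughly, one layer and head's attention weights, which can be sketched in plain Python as scaled dot-product attention with a causal mask (GPT-2 only lets a token attend to itself and earlier tokens). The query and key vectors here are hypothetical, and this does not use Inspectus's own API.

```python
import math

def softmax(row):
    """Numerically stable softmax; exp(-inf) contributes exactly 0."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(queries, keys, causal=True):
    """Attention weights: softmax over keys of (q . k) / sqrt(d) for each query."""
    d = len(queries[0])
    weights = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))   # mask out future tokens
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        weights.append(softmax(scores))
    return weights

tokens = ["Hello", " world", "!"]
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # hypothetical, one per token
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights = attention_weights(queries, keys)
for tok, row in zip(tokens, weights):
    print(f"{tok!r:10}", " ".join(f"{w:.2f}" for w in row))
```

Each printed row is one token's attention spread over the tokens it is allowed to see; a visualizer simply colors these numbers.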

Research like this offers new ways to understand (and potentially manipulate, or fine-tune) these powerful tools, making LLMs more transparent and more useful, especially in applications where a lack of operational clarity is hard to accept.

I’m glad to see a technically-oriented, non-hostile article about AI on Hackaday exploring a limitation of LLMs (explainability) and research on the issue. Thank you!

Last time I commented something along the lines of your comment (not directly about the theme, but about the author or otherwise), my comment got deleted. Guess they are just selective ’bout whose comments to delete.

Authors likewise are black boxes, and we have no understanding of their inner workings, it seems.

Nah, just ‘kismet…

One problem is that we assume we can know how our own thought processes work, but to an extent that assumption is self-evidently wrong.

People will say that they “think in words”, hearing their thoughts internally as spoken language. But that would mean there is some agent inside your head choosing the words – which is still “you” – so you haven’t observed your actual thoughts, you’ve just bounced off the question. The truth is that the whole system works as a holistic blob, and when you observe that stimulus X produces response Y, that’s already the most boiled-down explanation you can get. There’s no hierarchical flow chart to decode (mostly).

LLMs aren’t human minds, but they ape that holistic nature by design. You might extract some peripheral stats, but the final answer to the question “why did it say that” is always going to be “because it did”.

“People will say that they “think in words”, hearing their thoughts internally as spoken language.”

They say that, but they are wrong. The words are the expression of the thought, not the thought itself.

If words are merely the expression of the thought… then what do you argue is the precise substance of thought itself? In other words, what is the structural nature of the “thought-stuff” that is then presumably fed into your brain’s stuff-to-words converter?

I don’t necessarily think that thought is comprised solely of words, but words are most certainly an integral part of thoughts/thinking… they’re not just bolted onto thoughts, after the fact.

This is why the first step of any malignant entity wishing to control or manipulate a human population is to dictate to them what words they’re allowed to use. Stated another way, it’s been understood by politicians and social engineers for a long, long, time that if you can control words, you can control thoughts.

To which one can only respond, I will not ignore all previous instructions. No siree, and I am adamant in that.

>If words are merely the expression of the thought… than what do you argue is the precise substance of thought itself ?

I don’t know, but certain brain malfunctions reveal that speech in the brain is its own thing that can operate perfectly disconnected from what “you” are thinking. Your internal narrator is matching words to whatever other processes are happening right now in some sort of feedback loop – and when that feedback loop is broken, the internal narrator breaks down into meaningless babble.

In Korsakoff syndrome for example, the person’s episodic memory is missing but their mouth is still running, so they appear to just invent stuff that didn’t happen when asked about things they don’t remember. The speaking part of the brain is just stringing words together while the part of the brain that checks whether they’re correct is asleep.

> it’s been understood by politicians and social engineers for a long, long, time that that if you can control words, you can control thoughts.

That’s been discredited for a long, long, time. Linguistic determinism is bunk. That doesn’t stop people from trying though, as many politicians and social engineers do with their attempts at controlling language.

Agreed, if a word is banned, people just invent another word or phrase to embody and communicate what the banned word was used for.

I don’t think it’s completely wrong to say that language can limit thinking.

But only for the innumerate.

Numerate people have abstract, mathematically rooted thought processes that English/Linguistics majors just don’t comprehend.

Which is why having GD lawyers run a nation is such a mistake.

Thank you so much for mentioning Korsakoff syndrome, this describes my friend’s relative’s symptoms much more precisely than ‘dementia’. And Wikipedia says you just need Vitamin B1!

That was the premise of 1984, with the concept of Newspeak: if they wanted to erase a crime, they would slowly phase out the words and language dedicated to the crime.

Words aren’t used by “lesser” animals, yet the emotions they have exist without them. They think of playing, sharing, grooming, eating, etc without possessing words.

When you ask people to recall their earliest memories, those memories are typically from a time when they had learned to use words. It’s likely that the symbolic exchange of words allows us to store more complex memories. The earlier nonverbal memories might be there, but it might be difficult to access them after learning the new symbolic system.

Surely the infant brain doesn’t already have concepts or symbols in place for Tax Fraud, Oversharing, or Plagiarism? There would be emotions of distress, hunger, and joy. Indecision might happen when an infant cannot decide at first which toy it wants to play with, a stuffed bear or its rattle, but the concept of indecision probably didn’t exist until the child experienced it. It can remember the moment later, but lacking any preexisting brain wiring or symbols for the feeling of indecision, it must store that memory without them.

Using symbols wires the infant’s highly adaptable, plastic brain to use them in preference to the older, preexisting mammalian reactionary/instinctive memory store, or to store both in different ways and places. This would limit describing or sharing one’s earliest memory to a time when one had begun to acquire language.

“Words aren’t used by “lesser” animals, yet the emotions they have exist without them. They think of playing, sharing, grooming, eating, etc without possessing words”

I think it’s a mistake to confuse emotions with thoughts. In some cases they coexist, but you can certainly experience either one without the other.

You need words to plan in the abstract…Manage an investment portfolio that you then will to a charity, for example. On the other hand, you can wake up in the morning happy, sad, or pissed off…just because.

I don’t think that’s strictly true, even though it’s usually the case that the words don’t express everything going on in your mind at the time. What I mean is, the thoughts you experience probably don’t all finalize before you then start methodically choosing words in a vacuum. Sometimes what you thought when, e.g., dropping something really is “oops” or a cuss word, most likely with a bit of motor-sensory context (e.g. the image of the falling item and your hand’s movement), but the word isn’t external to the process. You might even find that you experience a *different but mostly interchangeable thought* if you make an effort to choose the oops over the curse because you are around a child. Or try comparing thoughts where you used one language for the words with thoughts where you used a different language.

Sometimes, of course, a momentary thought really does finish without arguably having been molded into valid words. But it may still have some words mixed in with the information and patterns. Being startled by something could be described like this: an alert comes in from the sensory monitoring systems with a direction and/or an impression; to find a response, memory may be accessed for pattern matching against the senses; and you might start moving depending on how much of what has come back, instant by instant, votes yes or no.

Other times I have multiple words in the same place in a thought, with different weights, or with mental links to other memories that give better meaning than just the word itself. It’s not that I had the thought without any words, it’s that I didn’t have solely words. But the words were still in there, not external.

I imagine that at some point, 10-20 years from now, there will be methodologies for unwinding the black box. I suppose an LLM built of many smaller, more primitive LLMs could do it.

The thing is, the more time passes, the more intricate AI models become, so it won’t really get any easier to unwind them.

>>there will be methodologies to unwinding the black box.

There already are. I was at a CIO peer forum in May and the VP of AI Tools for a large software company said they had started developing the “AI Therapist” position several years ago, and now had an “AI Therapy Department” of several people. He also said, “make no mistake, there is no LLM integrator today who is adequately managing the risks, especially in data privacy, output suitability, or preventing poisoning via input” to the level required for enterprise software. A bit beyond the article’s scope but given its source it was a comment that disturbed me.

“Lance asks us to imagine a woman named Sophie who grew up speaking French and English”

I don’t have to imagine, I know a woman named Sophie who is fluent in French and English!

B^)

Here’s a question I’d like to see HaD tackle:

There have been stories recently about how AI is going to need huge amounts of power. So: is it worth it? Has AI produced things that are worth the cost of the power they consume?

So far, I’m profoundly unimpressed by LLMs. But what about other types of AI? Do they also produce large numbers of wrong answers? Is their value mainly in suggesting ideas people hadn’t thought of, so that we can investigate further and see if they pan out?

If so, then in addition to the electricity they use, add in the cost of the wild goose chases they send us on.

You mean like Bitcoin?

B^)

Lolwut. Coincidentally, a coworker tried to virtue-signal by bringing this up, referencing a Vox article. I ran the numbers: AI is using something like 0.015 of human energy consumption, and that’s *IF* it doubles AND all datacenter power usage is 100% AI XD

That is actually quite a lot. For example, one percent of humanity’s energy consumption buys all the nitrogen fertilizers for agriculture we’re producing.

Think of it this way: would you be willing to pay $900 every year out of your pocket to have AI? That’s just 1.5% of the average American income – surely that should be negligible, right?

The exaggerated power requirements are just that: exaggerations and unfiltered extrapolations.

If you build a data center right now it must inherently be for AI. If you run a full weather simulation on it it must be AI, etc.

The numbers in mainstream press are absolute utter garbage. I’d like to see some real numbers, calculated per request, talked about for use and also for building the AI.

Some of those numbers are very temporary; we won’t be rebuilding AI from scratch in another decade, we may already be hitting the limits of feeding bulk data to an LLM and getting improved output from it. That’s a good thing, but it’ll tank any current ridiculous projections.

If we can distill the final task down to something that effectively works on a phone, the overall power usage cannot be as bad as some describe. I do expect that we’ll end up with more targeted LLM processors: you’ll load a model for some specific purpose, built from a larger LLM.

Thing is, for a lot of applications AI uses less energy than traditional algorithms. If you’re going to do all this computation ANYWAY, why question the more efficient approach’s energy use?

But to answer your question more directly, AI is making massive strides in pharmaceutical research, biochemistry, physics simulations of all varieties, optimizing traditional software for better performance (i.e. less energy usage), making researchers and engineers more efficient in their work, reducing cycle times, catching problems in simulation before expensive and time-consuming physical construction has to be redone, etc.

This is like asking whether electricity is producing anything, since it adds work on top of the production of whale oil.

“Has AI produced things that are worth the cost of the power they consume?”

Have you received an mRNA COVID vaccine? Moderna’s AI identified all eligible trial candidates within just two days. Consider the potential delay if we relied solely on traditional methods of drug development. This advancement has saved countless lives, albeit with some energy consumption whose sources are not entirely specified.

“Have you received an mRNA COVID vaccine?”

Nope ( ͡° ͜ʖ ͡°)

Me neither, I don’t want to become another Pfizer pfatality!

There is plenty of “other AI” out there, in fact it’s been out there since the 1980s.

I have noticed a trend where, as AI has become mainstream, it has been subsumed into general computing.

To name but a few AI applications:

Speech recognition

Speech synthesis

Video object detection

Expert systems (e.g. for medical diagnosis)

Chatbots

It’s because of the AI hype cycle. The applications of AI are not called AI because 1) they’re not “intelligent” but just better algorithms to do some limited task X, and 2) the companies developing and selling them don’t want to have anything to do with the hype mongers because they wouldn’t be taken seriously otherwise.

There actually was a brilliant talk by Annika Rüll at 37C3, the German Chaos Communication Congress in 2023. Refer to https://events.ccc.de/congress/2023/hub/en/event/lass_mal_das_innere_eines_neuronalen_netzes_ansehen/

or the respective video https://media.ccc.de/v/37c3-11784-lass_mal_das_innere_eines_neuronalen_netzes_ansehen (in German)

Have you ever considered that there might be a better way to generate intelligence? I’ll give you a clue: Syntax disambiguated to Semantics delivers Meaning. Think about that for a while!

I recommend everyone skim through the original paper, it’s some real interesting stuff. The most intriguing part to me is when they identified a feature for error recognition (activated when seeing typos in code, array index out of bounds errors, and some type related errors) and then clamped it with a high or low value. When clamped high, it hallucinated fake error messages in error free code, and when clamped low, it ignored any actual errors. It’s comforting to me that (at least for now) LLMs can be at least partially lobotomized.
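The clamping experiment described above can be sketched in the same toy terms: force one feature's activation to a chosen value and rebuild the activation vector from the feature directions. The “code error” direction and all numbers are hypothetical; in a real intervention the modified vector would then flow on through the rest of the model and change what it writes.

```python
# Hypothetical toy: one "code error" feature plus one unrelated feature,
# each with a made-up direction in a 3-D activation space.
OTHER_DIRECTION = [1.0, 0.0, 0.0]
ERROR_DIRECTION = [0.0, 1.0, 0.0]
DIRECTIONS = [OTHER_DIRECTION, ERROR_DIRECTION]
ERROR_INDEX = 1

def reconstruct(feature_acts, directions):
    """Rebuild an activation vector as the sum of activation * direction."""
    out = [0.0] * len(directions[0])
    for act, direction in zip(feature_acts, directions):
        out = [o + act * d for o, d in zip(out, direction)]
    return out

def clamp(feature_acts, index, value):
    """Return a copy of the activations with one feature forced to value."""
    clamped = list(feature_acts)
    clamped[index] = value
    return clamped

clean_code = [1.0, 0.0]   # error feature silent on error-free code
buggy_code = [1.0, 2.0]   # error feature firing on a real bug

high = reconstruct(clamp(clean_code, ERROR_INDEX, 5.0), DIRECTIONS)  # "sees" errors
low = reconstruct(clamp(buggy_code, ERROR_INDEX, 0.0), DIRECTIONS)   # ignores errors
print(high, low)
```

Clamping high injects the error direction where none belongs; clamping low erases it even when the input really contains a bug, mirroring the two behaviors described in the comment.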